Engineering Insights

In the last post, we recapped the emergence of C as the dominant programming language for embedded automotive systems, from its roots in Unix in the early 1970s up to gathering criticism around its relative eccentricities as the platform matured. We also covered the emergence of safety standards ISO 26262 and IEC 61508, as well as the launch of MISRA C.

In this post, we’ll examine the scope that C gives a developer to generate problematic code. We’ll also outline the kind of rigor and mindset necessary to safely harness the power of the language, and avoid those conflicts of intent and execution which have garnered C some critics, from a safety standpoint, over the last few decades.

It may not be immediately clear what exactly constitutes a safety-related risk in the context of safety-critical embedded software. Here there are a couple of unusual considerations:

In principle, a documented programming language should leave nothing unclear, freeing the developer to write code under a due regimen of quality checks. But developing safety-critical software puts the developer in the unusual position of having limited access to their own toolbox: some areas of the ANSI C standard are left undefined, unspecified or implementation-defined, and constructs that depend on them must remain off-limits. Different compilers may follow different implementation strategies in those areas, which can lead to unexpected behavior across platforms.

In addition to this, some language constructs are simply more prone to human error than others — a factor which I’ll investigate in the next post in this series.

Language features with undefined behavior

C is very flexible, permitting a wide range of operations that are valid from a syntax perspective, but which could make the compiled program dangerously unstable if used in the wrong way. Certainly, people like Dennis Ritchie and Brian Kernighan were betting hard on the professionalism and competence of software developers when they offered such sharp knives to them.

The same applies to the compiler vendors, as regards the optimization of generated binaries. In order to produce very efficient binary code, most C compilers do not insert run-time ‘sanity checks’ during code generation, but rather rely on the developer deploying the code in a context (and within set boundaries) appropriate to its design.

The possibility of undefined behavior in C-based projects can be minimized in at least two important ways. Firstly, by taking the responsibility to write all meaningful safety checks directly into the routines; and secondly, by raising the compiler’s warning level and using static analyzers to automatically identify risk-laden code segments that might have slipped by the developer.

Let’s discuss a few of the essential rules that even the most seasoned C practitioner can lose touch with during the organized chaos of a large project.

Using an uninitialized variable

Unlike many other programming languages, declaring a local variable in C does not imply having it filled with a default value like 0, empty string, null or whatever else a software developer might imagine; an uninitialized local (automatic) variable is just a name for a memory area with unknown content. Variables with static storage duration are zero-initialized, which is why the problem typically shows up inside functions.

Even C beginners know this rule, but far more experienced developers fall foul of its consequences as their project grows, through inattention or because the code has become too complex to read easily.

Bugs that result from uninitialized variables can be very hard to discover through testing, since failures may appear only intermittently, depending on whatever happens to be in memory at the time.

Such anomalies can not only survive the debugging process but can even get compounded by further structural conflicts. Take the code below, for example:

void turnWheel(void) {
    bool bDirection;  /* never initialized */

    if (true == bDirection) {
        turnWheelLeft();
    }
    if (false == bDirection) {
        turnWheelRight();
    }
}

At first glance you might think that the wheel will turn either to the left or the right; but closer inspection reveals that bDirection can hold an arbitrary bit pattern that matches neither the integer value of true nor that of false, meaning that the car will keep going straight ahead!

As an added bonus, because reading such an uninitialized variable is undefined behavior, a compiler is free to disregard the apparent logic of the code that follows and treat both conditionals as true (or both as false), just to reduce the number of branches! Let’s hope that such a scenario never appears in a functional safety-qualified compiler.

Luckily, compilers and third-party static analyzers support the detection of uninitialized variables. Data flow analysis is a critical aspect in the development of functional safety-related applications, so enabling the corresponding warning levels in static analyzers is one of the first things to check off in a project.
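As a minimal sketch of how the ambiguity could be designed out, the direction can be passed in as an explicit command, with every unexpected value rejected rather than silently ignored. The SteerCommand type, its enumerators and the counters standing in for the real actuator calls are hypothetical names introduced only for this illustration.

```c
#include <stdbool.h>

/* Hypothetical steering commands; the names are illustrative only. */
typedef enum {
    STEER_NONE = 0,
    STEER_LEFT,
    STEER_RIGHT
} SteerCommand;

/* Counters standing in for the real actuator calls (assumption). */
static unsigned int g_leftTurns;
static unsigned int g_rightTurns;

static void turnWheelLeft(void)  { g_leftTurns++; }
static void turnWheelRight(void) { g_rightTurns++; }

/* Returns true when a valid command was executed, false otherwise. */
bool turnWheel(SteerCommand eCommand)
{
    bool bHandled = true;

    switch (eCommand) {
    case STEER_LEFT:
        turnWheelLeft();
        break;
    case STEER_RIGHT:
        turnWheelRight();
        break;
    default:
        /* Any unexpected value is reported to the caller. */
        bHandled = false;
        break;
    }
    return bHandled;
}
```

The caller now has to supply a value, so there is no local variable left to forget, and the return value lets the caller move to a safe state when the command is invalid. With GCC, -Wall already includes -Wuninitialized, which would flag the original version in many cases.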

Accessing out-of-bounds elements

Arrays are another area where the programmer needs to pay close attention. C performs no bounds checking on array accesses, so defining an array opens the gates to out-of-bounds indexed accesses to it, either through negative indexes or through indexes at or beyond the array size.

As far as the standard is concerned, out-of-bounds reads are just as undefined as writes, but in practice they tend to be more predictable: they typically either return meaningless data or raise an exception when they touch invalid memory space, so the control flow can usually be anticipated.

Out-of-bounds writes are worse, since they can change data belonging to other variables in an unpredictable way. Furthermore, when such write attempts start to touch stack frames, they can also overwrite return addresses, causing the CPU to fetch and execute instructions from unrelated memory areas.
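One common way to contain the risk is to route every indexed access through a small accessor that validates the index first. The sketch below assumes a hypothetical four-element sensor array; the names SENSOR_COUNT, sensorWrite and sensorRead are made up for the example.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define SENSOR_COUNT 4u  /* hypothetical array size */

static uint16_t g_au16Sensors[SENSOR_COUNT];

/* Writes a value only when the index is inside the array. */
bool sensorWrite(size_t index, uint16_t u16Value)
{
    if (index >= SENSOR_COUNT) {
        return false;  /* reject instead of corrupting neighboring memory */
    }
    g_au16Sensors[index] = u16Value;
    return true;
}

/* Reads a value through an out-parameter; returns false when out of range. */
bool sensorRead(size_t index, uint16_t *pu16Value)
{
    if ((pu16Value == NULL) || (index >= SENSOR_COUNT)) {
        return false;
    }
    *pu16Value = g_au16Sensors[index];
    return true;
}
```

Because the index is an unsigned size_t, a negative value passed by mistake converts to a very large number and fails the same range check, so a single comparison covers both kinds of out-of-bounds access.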

Division by zero

Division by zero does not work; everyone knows this. So why is it worth discussing, when the outcome seems so well understood and it’s commonly assumed that attempting this calculation will generate nothing worse than a crash?

(Though it’s a topic for another time, it’s worth noting that a dumb crash of the compiled application may not be enough to preserve the safe state of the host system.)

Take this snippet:

void operation(uint32_t u32Distance, uint32_t u32Time) {
    uint32_t u32Speed = 0;

    safetyCriticalAction();
    u32Speed = u32Distance / u32Time;
    // do something with the speed
    ….
}

Based on the code above, we might be pretty confident that safetyCriticalAction() will always be called, since the division by zero should appear only after the function returns.

However, the anticipated crash is not controlled by the compiler, but by the hardware and the host operating system. The arithmetic units on many CPUs are able to detect the situation and signal an exception to the operating system, which will most likely terminate the application.

But don’t be surprised if that isn’t what actually happens. The compiler doesn’t care about the problem (since there’s no dependency between u32Speed and the called function) and might decide to generate the binary code for the division before the critical call.

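An even trickier situation emerges in the case of CPUs that feature out-of-order execution. They have internal optimizers for the execution flows based on data dependency, similar to compilers; so even if the compiler preserves the order of the instructions defined by the C code, the hardware may still execute them in a different order internally. Many such CPUs retire instructions in program order and raise precise exceptions, which limits what software can observe, but this is a property of the target hardware to verify rather than assume.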
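The most direct defense is to make the division unreachable when the divisor is zero, so that no execution path relies on a hardware exception at all. The following is a minimal sketch; computeSpeed and its out-parameter convention are assumptions made for the example, not part of the original code.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Computes distance / time.
 * Returns false and leaves *pu32Speed untouched when the time is zero
 * or the output pointer is NULL.
 */
bool computeSpeed(uint32_t u32Distance, uint32_t u32Time, uint32_t *pu32Speed)
{
    if ((pu32Speed == NULL) || (u32Time == 0u)) {
        return false;
    }
    *pu32Speed = u32Distance / u32Time;
    return true;
}
```

With the check in place the division only ever executes with a non-zero divisor, so the program stays within defined behavior and the caller decides explicitly what a zero time means for the safe state of the system.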

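Arithmetic or logical overflows

C doesn’t define the behavior of signed integer overflow, nor of shifts by a negative count or by a count equal to or greater than the width of the type; what actually happens is most likely to be hardware-specific or driven by a compiler’s internal algorithms. Unsigned arithmetic, by contrast, is defined to wrap around.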

Look at the piece of code below:

{
    int32_t s32N = INT_MAX;

    printf("s32N = %d\n", s32N);
    printf("s32N+1 = %d\n", s32N+1);
    printf("(s32N < s32N +1) = %d\n", s32N < s32N +1);
    printf("s32N<<(-1) = %x\n", s32N<<(-1));
    printf("s32N<<32 = %x\n", s32N<<32);
    printf("s32N>>32 = %x\n", s32N>>32);
}

We might expect results based on a common-sense extrapolation of what we learned about the logical operations of CPUs, assuming that INT_MAX + 1 == INT_MIN, or that any shift by a count equal to or greater than the width of the type wipes the result out to zero. Though such outcomes can often be anticipated on a given platform, it’s better to avoid any such ‘out-of-standard’ scenarios in the first place, rather than relying on platform-specific behavior.
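Avoiding these scenarios usually means testing the operands before the operation rather than inspecting the result afterwards. The helpers below are a sketch of that idea under the assumption of 32-bit operands; addChecked and shiftLeftChecked are hypothetical names, not standard library functions.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Adds two int32_t values; returns false instead of overflowing. */
bool addChecked(int32_t s32A, int32_t s32B, int32_t *ps32Sum)
{
    if (ps32Sum == NULL) {
        return false;
    }
    /* The limits are compared before adding, so the sum itself never overflows. */
    if (((s32B > 0) && (s32A > (INT32_MAX - s32B))) ||
        ((s32B < 0) && (s32A < (INT32_MIN - s32B)))) {
        return false;
    }
    *ps32Sum = s32A + s32B;
    return true;
}

/* Left-shifts an unsigned value; rejects counts outside 0..31. */
bool shiftLeftChecked(uint32_t u32Value, int32_t s32Count, uint32_t *pu32Result)
{
    if ((pu32Result == NULL) || (s32Count < 0) || (s32Count >= 32)) {
        return false;
    }
    *pu32Result = u32Value << s32Count;
    return true;
}
```

The shift helper works on an unsigned operand on purpose: bits shifted out of an unsigned value are simply discarded, whereas shifting a signed value into or past the sign bit is another case the standard leaves undefined.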

Compiler optimization algorithms

Compilers should not be considered as a separate source of undefined behavior. Those qualified for use in functionally safe applications (such as in the embedded automotive sector) are expected to be stable and adherent to a standard C definition.

But in order to generate optimized binaries, compilers are entitled to assume that undefined behavior never occurs, and to treat every code path the C standard leaves undefined as a potential area of optimization. Undefined behavior can therefore end up as a kind of wildcard for compiler writers who, with the aim of squeezing the most from each CPU tick, might deliberately allow messy code to be generated for undefined scenarios, if this provides less branching and better data or code locality on the valid and most probable execution paths.

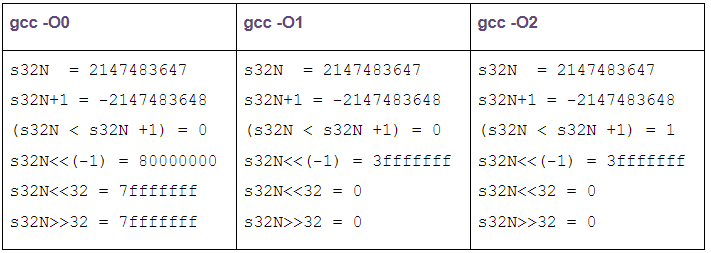

To give you an idea of the possible problems that compiler optimizations can cause, compiling the arithmetic and logical overflow example above with GCC v5.4 at different optimization levels (-O0, -O1, -O2) produces different results when executed on Linux.

As you can see, in the case of -O2, despite the fact that s32N is evaluated to INT_MAX and (s32N+1) is evaluated to INT_MIN, (s32N < s32N +1) is aggressively hardcoded to true, since this is the correct value in every case except the one considered here, which, being under the umbrella of ‘undefined behavior’, is allowed to return incorrect results.
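If the intent behind such a comparison is to ask whether a value still has room to grow by one, the question can be put to the compiler without ever performing the overflowing addition. A small sketch follows, with hasSuccessor as a hypothetical helper name.

```c
#include <stdbool.h>
#include <stdint.h>

/* True when s32N + 1 is representable in an int32_t. */
bool hasSuccessor(int32_t s32N)
{
    return s32N < INT32_MAX;
}
```

Since no expression in this function can overflow, its result does not depend on the optimization level.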

Equally interesting variations can also be observed for shifting operations.

In the next post in this series, we’ll examine some of the key areas which require particular vigilance in C-based embedded projects.